Many experiments involve the (quasi-)random selection of both participants and items. Westfall et al. (2014) provide a Shiny-app for power-calculations for five different experimental designs with selections of participants and items. Here I want to present my own Shiny-app for planning for precision of contrast estimates (for the comparison of up to four groups) in these experimental designs. The app can be found here: https://gmulder.shinyapps.io/precision/

(Note: I have taken the code of Westfall's app and added code or modified existing code to get precision estimates instead of power; so, without Westfall's app, my own modified version would never have existed.)

The plan for this post is as follows. I will present the general theoretical background (mixed model ANOVA combined with ideas from Generalizability Theory) by considering the comparison of three groups in a counterbalanced design.

Note 1: This post uses mathjax, so it's probably unreadable on mobile devices. Note: a (tidied up) version (pdf) of this post can be downloaded here: download the pdf

Note 2: For simulation studies testing the procedure go here: https://the-small-s-scientist.blogspot.nl/2017/05/planning-for-precision-simulation.html

Note 3: I use the terms stimulus and item interchangeably; have to correct this to make things more readable and comparable to Westfall et al. (2014).

Note 4: If you do not like the technical details you can skip to an illustration of the app at the end of the post.

The general idea

The focus of planning for precision is to try to minimize the half-width of a 95% confidence interval for a comparison of means (in our case). Following Cumming's (2012) terminology I will call this half-width the Margin of Error (MOE). The actual purpose of the app is to find the sample sizes for participants and items that give a high probability ('assurance') of obtaining a MOE no larger than some pre-specified target value.

Expected MOE for a contrast

For a contrast estimate $\hat{\psi}$ we have the following expression for the expected MOE. $$E(MOE) = t_{.975}(df)*\sigma_{\hat{\psi}},$$ where $\sigma_{\hat{\psi}}$ is the standard error of the contrast estimate. Of course, both the standard error and the df are functions of the sample sizes.

For the standard error of a contrast with contrast weights $c_1$ through $c_a$, where $a$ is the number of treatment conditions, we use the following general expression.

$$\sigma_{\hat{\psi}} = \sqrt{\sum c^2_i \frac{\sigma^2_w}{n}},$$ where n is the per treatment sample size (i.e. the number of participants per treatment condition times the number of items per treatment condition) and $\sigma^2_w$ the within treatment variance (we assume homogeneity of variance).

For a simple example take an independent samples design with n = 20 participants per condition, each responding to 1 item in one of two possible treatment conditions (this is basically the set up for the independent t-test). With contrast weights $c_1 = 1$ and $c_2 = -1$, and $\sigma^2_w = 20$, the standard error for this contrast equals $\sigma_{\hat{\psi}} = \sqrt{\sum c^2_i \frac{\sigma^2_w}{n}} = \sqrt{2*20/20} = \sqrt{2}$. (Note that this is simply the standard error of the difference between two means as used in the independent samples t-test.)

In this simple example, df is the total sample size (N = n*a) minus the number of treatment conditions (a), thus $df = N - a = 38$. The expected MOE for this design is therefore, $E(MOE) = t_{.975}(38)*\sqrt{2} = 2.0244*1.4142 = 2.8629$. Note that using these figures entails that 95% of the contrast estimates will take values between the true contrast value plus and minus the expected MOE: $\psi \pm 2.8629$.
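The arithmetic of this two-group example can be checked with a few lines of Python. This is only a minimal sketch and not code from the app: the function names are made up for illustration, and the critical value 2.0244 is simply copied from the text rather than computed.

```python
import math

def contrast_se(weights, sigma2_w, n):
    """Standard error of a contrast: sqrt(sum(c_i^2) * sigma2_w / n)."""
    return math.sqrt(sum(c * c for c in weights) * sigma2_w / n)

def expected_moe(t_crit, weights, sigma2_w, n):
    """Expected MOE: critical t value times the standard error."""
    return t_crit * contrast_se(weights, sigma2_w, n)

# Two-group example: n = 20 per condition, sigma2_w = 20, weights {1, -1}.
a, n = 2, 20
df = a * n - a                               # N - a
se = contrast_se([1, -1], 20, n)             # equals sqrt(2)
moe = expected_moe(2.0244, [1, -1], 20, n)   # t_.975(38) taken from the text
print(df, round(se, 4), round(moe, 4))
```

Passing the critical value in as an argument keeps the sketch within the standard library; with a statistics package you would compute the t quantile from df instead.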

For the three groups case, and contrast weights {$1, -1/2, -1/2$}, the same sample sizes and within treatment variance gives $E(MOE) = t_{.975}(57)*\sqrt{1.5*\frac{20}{20}} = 2.4525$.

(If you like, I've written a little document with derivation of the variance of selected contrast estimates in the fully crossed design for the comparison of two and three group means. That document can be found here: https://drive.google.com/open?id=0B4k88F8PMfAhaEw2blBveE96VlU)

The focus of planning for precision is to find sample sizes for which the expected MOE equals a certain target MOE. The app uses an optimization function that minimizes the squared difference between expected MOE and target MOE to find the optimal (minimal) sample sizes required.
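The app's optimizer is not reproduced here. As a rough sketch of the planning idea for the simple independent samples design, the loop below looks for the smallest per-condition n whose expected MOE does not exceed the target. Note the assumptions: it uses a plain search instead of minimizing a squared difference, it replaces the t quantile by the standard normal quantile (which slightly underestimates MOE when df is small), and the function names and the target of 2.0 are made up for illustration.

```python
import math
from statistics import NormalDist

def expected_moe_z(n, weights, sigma2_w):
    """Expected MOE with a normal approximation to the t quantile (assumption)."""
    z = NormalDist().inv_cdf(0.975)
    return z * math.sqrt(sum(c * c for c in weights) * sigma2_w / n)

def smallest_n(target_moe, weights, sigma2_w, n_max=100_000):
    """Smallest per-condition n with expected MOE <= target, or None if not found."""
    for n in range(2, n_max + 1):
        if expected_moe_z(n, weights, sigma2_w) <= target_moe:
            return n
    return None

# Hypothetical target MOE of 2.0 for the two-group example (sigma2_w = 20).
n_required = smallest_n(2.0, [1, -1], 20)
print(n_required)
```

Because expected MOE decreases monotonically in n, the first n that meets the target is also the minimal one, so a simple search is enough in this one-dimensional case.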

Planning with assurance

If the expected MOE is equal to target MOE, the sample estimate of MOE will be larger than your target MOE in 50% of replication experiments. This is why we plan with assurance (terminology from Cumming, 2012). For instance, we may want to have a 95% probability (95% assurance) that the estimated MOE will not exceed our target MOE.

In order to plan with assurance, we need (an approximation of) the sampling distribution of MOE. In the ANOVA approach that underlies the app, this boils down to the distribution of estimates of $\sigma^2_w$: $$MS_w \sim \sigma^2_w*\chi^2(df)/df, $$ thus $$\hat{MOE} \sim t_{.975}(df)\sqrt{\frac{1}{n}\sum{c_i^2}\sigma^2_w*\chi^2(df)/df}.$$

In terms of the two-groups independent samples design above: the expected MOE equals 2.8629. But, with df = 38, there is an 80% probability (assurance) that the estimated MOE will be no larger than: $$\hat{MOE}_{.80} = 2.0244 * \sqrt{1/20*2*20*45.07628/38} = 3.1181.$$ Note that the 45.07628 is the quantile $q_{.80}$ in the chi-squared (df = 38) distribution. That is $P(\chi^2(38) \leq 45.07628) = .80$.
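The same calculation as a small Python sketch (again not the app's code; the function name is hypothetical, and both the t value 2.0244 and the chi-squared quantile 45.07628 are copied from the text instead of being computed):

```python
import math

def assurance_moe(t_crit, chi2_quantile, df, weights, sigma2_w, n):
    """MOE that is not exceeded with the probability belonging to chi2_quantile."""
    ms_w = sigma2_w * chi2_quantile / df   # that quantile of the MS_w distribution
    return t_crit * math.sqrt(sum(c * c for c in weights) * ms_w / n)

# Two-group example: df = 38, sigma2_w = 20, n = 20, 80% assurance.
moe_80 = assurance_moe(2.0244, 45.07628, 38, [1, -1], 20, 20)
print(round(moe_80, 4))
```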

The app lets you specify a target MOE and a value for the desired assurance ($\gamma$), and will find the combination of number of participants and items that gives an estimated MOE no larger than target MOE in a proportion $\gamma$ of the replication experiments.

The mixed model ANOVA approach

Basically, what we need in order to plan for precision is to be able to specify $\sigma^2_w$ and the degrees of freedom. We will specify $\sigma^2_w$ as a function of variance components and use the Satterthwaite procedure to approximate the degrees of freedom by means of a linear combination of expected mean squares. I will illustrate the approach with a counterbalanced design with three treatment conditions.

A description of the design

Suppose we are interested in estimating the differences between three group means. We formulate two contrasts: one contrast estimates the mean difference between the first group and the average of the means of the second and third groups; the other estimates the difference between the means of the second and third groups. The weights of the contrasts are, respectively, {1, -1/2, -1/2} and {0, 1, -1}.

We are planning to use a counterbalanced design with a number of participants equal to $p$ and a sample of items of size $q$. In the design we randomly assign participants to $a$ groups, where $a$ is the number of conditions, and randomly assign items to $a$ lists (see Westfall et al., 2014 for more details about this design). All the groups are exposed to all lists of stimuli, but the groups are exposed to different lists in each condition. The number of group by list combinations equals $a^2$, and the number of observations in each group by list combination equals $\frac{1}{a^2}pq$. Each condition mean is estimated by combining $a$ group by list combinations, each of which is composed of different participants and stimuli. The total number of observations per condition is therefore $\frac{a}{a^2}pq = \frac{1}{a}pq$.
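To make the bookkeeping of this design concrete, here is a small sketch. The Latin-square rule used to pair groups and lists with conditions is my assumption of one possible counterbalancing scheme (any scheme in which each group and each list meets every condition exactly once would do), and the values p = 30, q = 15 and a = 3 are just illustrative.

```python
def counterbalanced_layout(a):
    """Condition index seen by group g (row) for list l (column), Latin-square style."""
    return [[(g + l) % a for l in range(a)] for g in range(a)]

def observations(p, q, a):
    """Observations per group-by-list cell and per condition."""
    per_cell = p * q / a**2
    per_condition = a * per_cell      # a cells contribute to each condition
    return per_cell, per_condition

layout = counterbalanced_layout(3)
per_cell, per_condition = observations(30, 15, 3)
for row in layout:
    print(row)
print(per_cell, per_condition)
```

Each row and each column of the layout contains every condition once, which is what makes the a cells that feed a single condition mean consist of different participants and different items.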

The ANOVA model

The ANOVA model for this design is

$$Y_{ijk} = \mu + \alpha_i + \beta_j + \gamma_k + (\alpha\beta)_{ij} + (\alpha\gamma)_{ik} + e_{ijk},$$

where the effect $\alpha_i$ is a constant treatment effect (it's a fixed effect), and the other effects are random effects with zero mean and variances $\sigma^2_\beta$ (participants), $\sigma^2_\gamma$ (items), $\sigma^2_{\alpha\beta}$ (person by treatment interaction), $\sigma^2_{\alpha\gamma}$ (item by treatment interaction) and $\sigma^2_e$ (error variance confounded with the person by item interaction). Note: in Table 1 below, $\sigma^2_e$ is (for technical reasons not important for this blogpost) presented as this confounding $[\sigma^2_{\beta\gamma} + \sigma^2_e]$.

We make use of the following restrictions (Sahai & Ageel, 2000): $\sum_{i = 1}^a \alpha_i = 0$, and $\sum_{i=1}^a(\alpha\beta)_{ij} = \sum_{i=1}^a(\alpha\gamma)_{ik} = 0$. The latter two restrictions make the interaction effects correlated across conditions (i.e., the person by treatment interaction effects are correlated across conditions for the same person; likewise, the item by treatment interaction effects are correlated across conditions for the same item. Interaction effects of different participants and items are uncorrelated). The covariances between the random effects $\beta_j, \gamma_k, (\alpha\beta)_{ij}, (\alpha\gamma)_{ik}, e_{ijk}$ are assumed to be zero.

Under this model (and restrictions) $E((\alpha\beta)^2) = \frac{a - 1}{a}\sigma^2_{\alpha\beta}$, and $E((\alpha\gamma)^2) = \frac{a - 1}{a}\sigma^2_{\alpha\gamma}$. Furthermore, the covariance between the interaction effects of the same participant (or the same item) in two different conditions is $-\frac{1}{a}\sigma^2_{\alpha\beta}$ for participants and $-\frac{1}{a}\sigma^2_{\alpha\gamma}$ for items.

Within treatment variance

In order to obtain an expectation for MOE, we use the expected mean squares to get an expression for the expected within treatment variance $\sigma^2_w$. These expected mean squares are presented in Table 1.

The expected within treatment variance can be found in the Treatment row in Table 1. It is comprised of all the components to the right of the component associated with the treatment effect ($\theta^2_a$). Thus, $\sigma^2_w = \frac{q}{a}\sigma^2_{\alpha\beta} + \frac{p}{a}\sigma^2_{\alpha\gamma}+[\sigma^2_{\beta\gamma} + \sigma^2_e]$. Note that this variance equals the sum of the expected mean squares of the Treatment by Participant ($E(MS_{tp})$) and the Treatment by Item ($E(MS_{ti})$) interactions, minus the expected mean square associated with Error ($E(MS_e)$).
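The relation between $\sigma^2_w$ and the three expected mean squares is easy to check numerically. The sketch below is not taken from the app; the function names are hypothetical, `s2_e` stands for the bracketed term $[\sigma^2_{\beta\gamma} + \sigma^2_e]$, and the input values are illustrative.

```python
def expected_mean_squares(p, q, a, s2_ab, s2_ag, s2_e):
    """E(MS) for Treatment x Participant, Treatment x Item, and Error."""
    ems_tp = q / a * s2_ab + s2_e
    ems_ti = p / a * s2_ag + s2_e
    ems_e = s2_e
    return ems_tp, ems_ti, ems_e

def within_treatment_variance(p, q, a, s2_ab, s2_ag, s2_e):
    """sigma2_w = (q/a) * s2_ab + (p/a) * s2_ag + s2_e."""
    return q / a * s2_ab + p / a * s2_ag + s2_e

# Illustrative values: p = 30 participants, q = 15 items, a = 3 conditions.
args = (30, 15, 3, 0.15, 0.15, 0.40)
ems_tp, ems_ti, ems_e = expected_mean_squares(*args)
sigma2_w = within_treatment_variance(*args)
print(sigma2_w, ems_tp + ems_ti - ems_e)   # both expressions should agree
```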

Degrees of freedom

The second ingredient we need in order to obtain expected MOE is the degrees of freedom used to estimate the within treatment variance. In the ANOVA approach the within treatment variance is estimated by a linear combination of mean squares (as described in the last sentence of the previous section). This linear combination is also used to obtain approximate degrees of freedom using the Satterthwaite procedure:

$$df =\frac{(E(MS_{tp}) + E(MS_{ti}) - E(MS_e))^2}{\frac{E(MS_{tp})^2}{(a - 1)(p-a)}+\frac{E(MS_{ti})^2}{(a - 1)(q-a)}+\frac{E(MS_e)^2}{(p-a)(q-a)}}$$
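Written out as code, the Satterthwaite approximation for this design could look as follows. This is a sketch under the assumption that the three mean squares have $(a-1)(p-a)$, $(a-1)(q-a)$ and $(p-a)(q-a)$ degrees of freedom, as in the formula above; the function name and the input values are illustrative and not taken from the app.

```python
def satterthwaite_df(ems_tp, ems_ti, ems_e, p, q, a):
    """Approximate df for the combination MS_tp + MS_ti - MS_e."""
    numerator = (ems_tp + ems_ti - ems_e) ** 2
    denominator = (
        ems_tp**2 / ((a - 1) * (p - a))
        + ems_ti**2 / ((a - 1) * (q - a))
        + ems_e**2 / ((p - a) * (q - a))
    )
    return numerator / denominator

# Illustrative expected mean squares for p = 30, q = 15, a = 3.
df_approx = satterthwaite_df(1.15, 1.90, 0.40, p=30, q=15, a=3)
print(round(df_approx, 4))
```

Note that the approximate df is generally not an integer, which is fine for looking up t and chi-squared quantiles.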

Expected MOE

(Note: I can't seem to get mathjax to generate align environments or equation arrays, so the following is ugly; Note to self: next time use R-studio or Lyx to generate R-html or an equivalent format).

The expected value of MOE for the contrasts in the counterbalanced design is (writing $t(df)$ for $t_{.975}(df)$):

$$E(MOE) = t(df)*\sqrt{(\sum_{i=1}^a c^2_i)(\frac{1}{a}pq)^{-1}\sigma^2_w}$$

$$= t(df)*\sqrt{(\sum_{i=1}^a c^2_i)(\frac{1}{a}pq)^{-1}(\frac{q}{a}\sigma^2_{\alpha\beta} + \frac{p}{a}\sigma^2_{\alpha\gamma}+[\sigma^2_{\beta\gamma} + \sigma^2_e])}$$

$$=t(df)*\sqrt{(\sum_{i=1}^a c^2_i)(pq)^{-1}(q\sigma^2_{\alpha\beta} + p\sigma^2_{\alpha\gamma}+a[\sigma^2_{\beta\gamma} + \sigma^2_e])}$$

$$=t(df)*\sqrt{(\sum_{i=1}^a c^2_i)(\sigma^2_{\alpha\beta}/p + \sigma^2_{\alpha\gamma}/q +a[\sigma^2_{\beta\gamma} + \sigma^2_e]/pq)}$$

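If you do not want to take the algebra on trust, the first and the last form of the standard error can be compared numerically. The sketch below uses hypothetical function names and illustrative input values; `s2_e` again stands for $[\sigma^2_{\beta\gamma} + \sigma^2_e]$.

```python
import math

def se_first_form(weights, p, q, a, s2_ab, s2_ag, s2_e):
    """sqrt(sum(c^2) * sigma2_w / n) with n = pq/a observations per condition."""
    sigma2_w = q / a * s2_ab + p / a * s2_ag + s2_e
    n = p * q / a
    return math.sqrt(sum(c * c for c in weights) * sigma2_w / n)

def se_last_form(weights, p, q, a, s2_ab, s2_ag, s2_e):
    """sqrt(sum(c^2) * (s2_ab/p + s2_ag/q + a*s2_e/(pq)))."""
    total = s2_ab / p + s2_ag / q + a * s2_e / (p * q)
    return math.sqrt(sum(c * c for c in weights) * total)

args = ([1, -0.5, -0.5], 30, 15, 3, 0.15, 0.15, 0.40)
print(se_first_form(*args), se_last_form(*args))   # should be identical up to rounding
```

The last form is the more informative one for planning: it shows that adding participants only shrinks the participant-related terms and adding items only the item-related terms.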

Finally an example

Suppose the scores in three conditions are normally distributed with (total) variances $\sigma^2_1 = \sigma^2_2 = \sigma^2_3 = 1.0$. Suppose furthermore that 10% of the variance can be attributed to the treatment by participant interaction, 10% to the treatment by item interaction, and 40% to the error confounded with the participant by item interaction (which leaves 40% of the total variance attributable to participant and item variance). Thus, we have $\sigma^2_{tp} = E((\alpha\beta)^2) = .10$, $\sigma^2_{ti} = E((\alpha\gamma)^2) = .10$, and $\sigma^2_e = .40$. Our target MOE is .25, and we plan to use the counterbalanced design with p = 30 participants and q = 15 items (stimuli).

Due to the model restrictions presented above we have $\sigma^2_{\alpha\beta} = \frac{a}{a - 1}\sigma^2_{tp} = \frac{3}{2}*.10 = .15$, $\sigma^2_{\alpha\gamma} = .15$, and $\sigma^2_e = .40$.
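The conversion, and the way the variance and covariance of the interaction effects fit together under the sum-to-zero restriction, can be sketched like this (hypothetical function name; the check at the end uses the fact that the variance of a sum that is restricted to zero must itself be zero):

```python
def model_variance(effect_variance, a):
    """Invert E(effect^2) = (a - 1)/a * sigma^2 to get sigma^2."""
    return a / (a - 1) * effect_variance

a = 3
sigma2_ab = model_variance(0.10, a)

# Variance and covariance of one participant's interaction effects across conditions.
var_effect = (a - 1) / a * sigma2_ab
cov_effect = -1 / a * sigma2_ab

# Var(sum over the a conditions) = a * var + a * (a - 1) * cov.
variance_of_sum = a * var_effect + a * (a - 1) * cov_effect
print(sigma2_ab, variance_of_sum)
```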

The value of $\sigma^2_w$ is therefore $5*.15 + 10*.15 +.40 = 2.65$, and the approximate degrees of freedom equal $df = (2.65^2) / ((5*.15 + .40)^2/(2*27) + (10*.15+.40)^2/(2*12) + .40^2/(27*12)) = 40.0368$.

For the first contrast, with weights {1, -1/2, -1/2}, the expected value of the Margin of Error is $E(MOE) = t_{.975}(40.0368)*\sqrt{1.5 * (.15/30 + .15/15 + 3*.40/(30*15))} = 0.3290$.

For the second contrast, with weights {0, 1, -1}, the expected value of the Margin of Error is $E(MOE) = t_{.975}(40.0368)*\sqrt{2*(.15/30 + .15/15 + 3*.40/(30*15))} = 0.3799$.

Thus, using p = 30 participants and q = 15 items (stimuli) leads to an expected MOE that is larger than the target MOE of .25 for both contrasts, so these sample sizes are too small.

We can use the app to find the required number of participants and items for a given target MOE. If the number of groups is larger than two, the app uses the contrast estimate with the largest expected MOE to calculate the sample sizes (in the default setting the one comparing only two group means). The reasoning is that if the least precise estimate (in terms of MOE) meets our target precision, the other ones meet our target precision as well.
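Since $\sigma^2_w$, the number of observations per condition and the df are the same for every contrast in this design, the contrast with the largest sum of squared weights is the one with the largest expected MOE. A tiny sketch of that selection step (my own illustration with a made-up function name, not the app's code):

```python
def least_precise_contrast(contrasts):
    """Return the contrast whose sum of squared weights is largest."""
    return max(contrasts, key=lambda weights: sum(c * c for c in weights))

contrasts = [[1, -0.5, -0.5], [0, 1, -1]]
worst = least_precise_contrast(contrasts)
print(worst, sum(c * c for c in worst))
```

For the two default contrasts the sums of squared weights are 1.5 and 2, so the pairwise comparison is the one that drives the sample sizes.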

Using the app

I've included lots of comments in the app itself, but please ignore references to a manual (does not exist yet, except in Dutch) or an article (no idea whether or not I'll be able to finish the write-up anytime soon). I hope the app is pretty straightforward. Just take a look at https://gmulder.shinyapps.io/precision/, but the basic idea is:

- Choose one of five designs

- Supply the number of treatment conditions

- Specify contrast(weights) (or use the default ones)

- Supply target MOE and assurance

- Supply values of variance components (see, e.g., Westfall et al., 2014, for more details).

- Supply a number of participants and items

- Choose run precision analysis with current values or

- Choose get sample sizes. (The app gives two solutions: one minimizes the number of participants and the other minimizes the number of stimuli/items). NOTE: the number of stimuli is always greater than or equal to 10 and the number of participants is always greater than or equal to 20.

An illustration

Take the example above. Our target MOE equals .25, and we want assurance of .80 to get an estimated MOE no larger than .25. We use a counterbalanced design with three conditions, and want to estimate two contrasts: one comparing the first mean with the average of means two and three, and the other comparing the second mean with the third mean. We can use the default contrasts.

For the variance components, we use the default values provided by Westfall et al. (2014). These are also the default values in the app (so we don't need to change anything now).

Let's see what happens when we propose to use p = 30 participants and q = 15 items/stimuli.

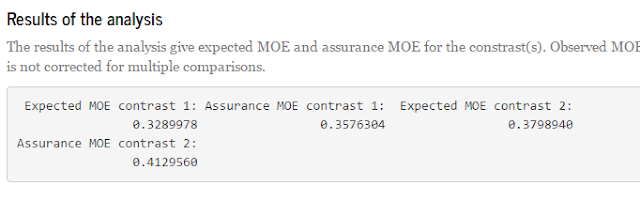

Here is part of a screenshot from the app:

These results show that the expected MOE for the first contrast (comparing the first mean with the average of the other means) equals 0.3290, and the assurance MOE for the same contrast equals 0.3576. Remember that we specified the assurance as .80. So, this means that 80% of the replication experiments give an estimated MOE as large as or smaller than 0.3576. But we want that to be at most 0.2500. Thus, 30 participants and 15 items do not suffice for our purposes.

Let's use the app to get sample sizes. The results are as follows.

If we enter the suggested sample sizes in the app and choose to run the precision analysis with current values, we see the following results.

As you can see: Assurance MOE is close to 0.25 (.24) for the second contrast (the least precise one), so 80% of replication experiments will get estimated MOE of 0.25 (.24) or smaller. The expected precision is 0.22. The first contrast (which can be estimated with more precision) has assurance MOE of 0.21 and expected MOE of approximately 0.19. Thus, the sample sizes lead to the results we want.

References

Cumming, G. (2012). Understanding the New Statistics. New York/London: Routledge.

Sahai, H., & Ageel, M. I. (2000). The analysis of variance. Fixed, Random, and Mixed Models. Boston/Basel/Berlin: Birkhäuser.

Westfall, J., Kenny, D. A., & Judd, C. M. (2014). Statistical power and optimal design in experiments in which samples of participants respond to samples of stimuli. Journal of Experimental Psychology: General, 143(5), 2020-2045.
